======================================

In modern analytics, forecasting models have become indispensable to data scientists. From finance and healthcare to supply chains and climate science, forecasting sits at the heart of data-driven decision-making. This article explores the core forecasting approaches, compares their advantages and limitations, and gives practical guidelines for selecting and applying them. It also covers industry trends and FAQs, with actionable strategies for both beginner and advanced data scientists.

Introduction: Why Forecasting Matters for Data Scientists

Forecasting is the process of predicting future outcomes based on historical data. Data scientists use forecasting models to:

- Anticipate demand in supply chains.

- Predict market movements in finance.

- Optimize inventory and logistics.

- Estimate user behavior in tech platforms.

- Support risk management in investment.

As highlighted in "Why Is Forecasting Important in Trading Strategies", forecasting is not just a technical exercise but a foundation of quantitative analysis, directly affecting performance, profitability, and long-term strategy.

Key Types of Forecasting Models

Forecasting models fall into two broad categories: statistical models and machine learning models. Hybrid models, the third group covered here, combine both to improve accuracy.

1. Statistical Forecasting Models

These are traditional approaches based on mathematical relationships between variables.

ARIMA (AutoRegressive Integrated Moving Average)

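- Use Case: Univariate time series with trend, which differencing removes; seasonal patterns call for the seasonal extension (SARIMA).

- Strengths: Well-understood, interpretable, works well once the series has been made stationary.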

- Limitations: Requires manual parameter tuning, struggles with highly non-linear data.
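To make the "integrated" and "autoregressive" parts concrete, here is a minimal sketch of a deliberately stripped-down ARIMA(1,1,0) with no constant term, written in plain Python. It differences the series once, estimates a single AR coefficient by least squares, and re-integrates the predicted differences. The function names are illustrative assumptions, not a library API, and a real project would normally rely on a maintained ARIMA implementation with proper order selection and diagnostics.

```python
def difference(series):
    """First-order differencing: the 'I' step with d = 1."""
    return [b - a for a, b in zip(series, series[1:])]


def fit_ar1(diffs):
    """Least-squares estimate of phi in d[t] = phi * d[t-1] + noise (no intercept)."""
    num = sum(prev * cur for prev, cur in zip(diffs, diffs[1:]))
    den = sum(prev * prev for prev in diffs[:-1])
    return num / den if den else 0.0


def forecast_arima_110(series, steps):
    """Forecast `steps` values ahead: predict differences, then add them back."""
    diffs = difference(series)
    phi = fit_ar1(diffs)
    level, last_diff = series[-1], diffs[-1]
    forecasts = []
    for _ in range(steps):
        last_diff = phi * last_diff   # AR(1) prediction of the next difference
        level += last_diff            # undo the differencing
        forecasts.append(level)
    return forecasts


history = [10.0, 12.0, 13.0, 13.5, 13.75]  # made-up series with shrinking increments
print(forecast_arima_110(history, 3))
```

In this toy series each increment is half the previous one, so the fitted coefficient is 0.5 and the forecasts keep growing by ever smaller steps.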

Exponential Smoothing (ETS, Holt-Winters)

- Use Case: Data with clear trends and seasonality.

- Strengths: Simple, robust, great for univariate forecasting.

- Limitations: Not suitable for complex multi-variable data.
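As a rough illustration of how this family works, the sketch below implements simple exponential smoothing and Holt's linear trend method in plain Python. The smoothing parameters `alpha` and `beta` are fixed by hand here as an assumption; in practice they are usually estimated from the data, and the full Holt-Winters method adds a seasonal component that this sketch omits.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the final smoothed level, which is the one-step-ahead forecast."""
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


def holt_linear(series, alpha, beta, steps):
    """Holt's linear trend method; needs at least two observations."""
    level, trend = series[0], series[1] - series[0]
    for value in series[1:]:
        prev_level = level
        # Blend the new observation with the previous level-plus-trend projection.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Blend the latest change in level with the previous trend estimate.
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, steps + 1)]


print(simple_exponential_smoothing([10, 20, 30], alpha=0.5))
print(holt_linear([2, 4, 6, 8], alpha=0.5, beta=0.5, steps=2))
```

A higher `alpha` makes the forecast react faster to recent observations, at the cost of passing more noise through.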

2. Machine Learning Forecasting Models

These use algorithms to identify patterns without strong statistical assumptions.

Random Forests and Gradient Boosting

- Use Case: Tabular forecasting with multiple explanatory variables.

- Strengths: Handles non-linear data, feature importance ranking.

- Limitations: Limited ability to capture temporal dependencies without feature engineering.

Neural Networks (RNNs, LSTMs, Transformers)

- Use Case: Sequential data like stock prices, demand forecasting, NLP.

- Strengths: LSTMs and Transformers are good at capturing long-range dependencies that plain RNNs tend to lose.

- Limitations: High computational cost, requires large datasets.

3. Hybrid Models

Hybrid models combine statistical rigor with machine learning flexibility.

- Example: ARIMA-LSTM models, where ARIMA handles linear components and LSTM captures non-linearities.

- Strengths: Higher accuracy in complex environments.

- Limitations: Complex implementation and tuning.

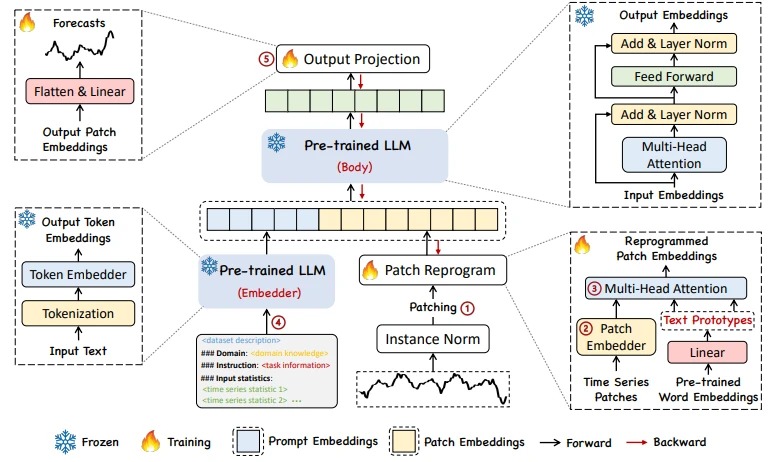

Image: Forecasting Model Landscape

Comparison between traditional statistical forecasting and modern machine learning approaches.

Practical Best Practices for Forecasting Models

1. Start with Simple Models Before Scaling

Always benchmark complex models against simple baselines like naïve forecasts or exponential smoothing. This prevents over-engineering and provides realistic performance benchmarks.
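Two of the simplest baselines can be written in a few lines. The sketch below uses plain Python; the function names and the `season_length` parameter are illustrative assumptions.

```python
def naive_forecast(series, steps):
    """Repeat the last observed value for every future step."""
    return [series[-1]] * steps


def seasonal_naive_forecast(series, season_length, steps):
    """Repeat the last full season, cycling through it as often as needed."""
    last_season = series[-season_length:]
    return [last_season[h % season_length] for h in range(steps)]


quarterly_sales = [1, 2, 3, 4, 5, 6]  # made-up numbers
print(naive_forecast(quarterly_sales, 2))
print(seasonal_naive_forecast(quarterly_sales, season_length=3, steps=4))
```

If a more complex model cannot beat these on held-out data, the extra complexity is hard to justify.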

2. Feature Engineering Matters

In forecasting, transforming raw data (lags, rolling averages, seasonal indicators) often has more impact on accuracy than model complexity.
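A minimal sketch of these transformations in plain Python follows. The feature names, the default lags, the window size, and the season length are all assumptions chosen for illustration. The rolling mean uses only values strictly before the target time step, so no future information leaks into the features.

```python
def make_features(series, lags=(1, 2), window=3, season_length=4):
    """Turn a series into rows of lag, rolling-mean, and seasonal-index features."""
    rows = []
    start = max(max(lags), window)  # first index with enough history
    for t in range(start, len(series)):
        row = {f"lag_{k}": series[t - k] for k in lags}
        # Mean of the `window` values before t (t itself is excluded).
        row[f"rolling_mean_{window}"] = sum(series[t - window:t]) / window
        # Position within the seasonal cycle, usable as a categorical feature.
        row["season_index"] = t % season_length
        row["target"] = series[t]
        rows.append(row)
    return rows


for row in make_features([1, 2, 3, 4, 5, 6]):
    print(row)
```

Rows in this shape can be fed to a tabular learner such as a random forest or gradient boosting model.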

3. Cross-Validation for Time Series

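Random train/test splits leak future information into training. Use time-based cross-validation (rolling or expanding windows) instead, so that each fold trains only on data that precedes its test period, as happens in real-world forecasting.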
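The splitting logic can be sketched as a small generator in plain Python. The function name and its parameters are hypothetical: leaving `max_train` unset gives an expanding window, and setting it gives a rolling window of fixed length.

```python
def time_series_splits(n_samples, initial_train, horizon, max_train=None):
    """Yield (train_indices, test_indices); every test index follows every train index."""
    train_end = initial_train
    while train_end + horizon <= n_samples:
        # Expanding window starts at 0; rolling window keeps only the last max_train points.
        train_start = 0 if max_train is None else max(0, train_end - max_train)
        yield (list(range(train_start, train_end)),
               list(range(train_end, train_end + horizon)))
        train_end += horizon


for train_idx, test_idx in time_series_splits(10, initial_train=4, horizon=2):
    print(train_idx, test_idx)
```

Averaging the error across folds gives a more honest estimate than a single hold-out period, because the model is tested at several points in time.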

4. Monitor Forecast Accuracy Continuously

Evaluate models with metrics like:

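- RMSE (Root Mean Squared Error): expressed in the units of the target and penalizes large errors most heavily.

- MAPE (Mean Absolute Percentage Error): relative error as a percentage; undefined when an actual value is zero.

- MASE (Mean Absolute Scaled Error): error scaled by that of a naïve forecast on the training data, which makes it comparable across series and models.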
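The three metrics can be computed as follows. This is a minimal sketch in plain Python that assumes equal-length inputs and, for MAPE, no zero actual values. The MASE variant shown scales by the in-sample error of a naïve (or seasonal naïve, via `season_length`) forecast on the training series.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error, in the units of the target."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent; actual values must be non-zero."""
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def mase(actual, predicted, train, season_length=1):
    """Forecast MAE divided by the in-sample MAE of a (seasonal) naive forecast."""
    mae = sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)
    naive_errors = [abs(train[t] - train[t - season_length])
                    for t in range(season_length, len(train))]
    return mae / (sum(naive_errors) / len(naive_errors))


actual, predicted = [100, 200], [110, 180]   # made-up values
train = [1, 2, 4, 7]                         # made-up training series
print(rmse(actual, predicted), mape(actual, predicted), mase(actual, predicted, train))
```

A MASE below 1 means the model's average error is smaller than that of the naïve forecast on the training data.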

Comparing Two Popular Approaches

Approach 1: ARIMA Models

- Advantages: Interpretable, suitable for small datasets, mathematically elegant.

- Disadvantages: Poor scalability with large datasets, requires strong statistical assumptions.

- Best For: Classic time series forecasting with limited data.

Approach 2: LSTM Models

- Advantages: Captures long-term dependencies, handles sequential patterns, widely used in deep learning pipelines.

- Disadvantages: Requires large datasets, computationally expensive, less interpretable.

- Best For: Complex, high-frequency data such as algorithmic trading or sensor data.

Comparison Table

| Model | Best Use Case | Pros | Cons | Suitable For |
|---|---|---|---|---|
| ARIMA | Traditional time series | Interpretable, low-data | Limited with non-linear patterns | Beginners, small datasets |
| LSTM | High-frequency, sequential data | Captures long-term dependencies | Complex, resource-heavy | Advanced data scientists, large datasets |

Recommendation: For most projects, start with ARIMA or ETS as baselines, then scale to LSTMs or hybrid models once accuracy improvements justify complexity.

Forecasting Applications in Trading

Forecasting models are particularly powerful in finance. Traders rely on predictive analytics to anticipate price movements and optimize portfolios. As discussed in "How Forecasting Impacts Trading Performance", hedge funds and algorithmic traders increasingly adopt machine learning forecasting models such as LSTMs and Transformers to handle non-linear, high-frequency data.

Industry Trends in Forecasting for Data Scientists

- Transformer Architectures: Models like Temporal Fusion Transformers (TFT) have been reported to outperform traditional LSTMs in long-horizon forecasting, although results vary by dataset.

- Automated Machine Learning (AutoML): Tools like AutoTS and H2O automate model selection, tuning, and feature engineering.

- Explainable AI (XAI): Shapley values and feature attribution make forecasting models more transparent for decision-makers.

- Cloud-Native Forecasting: Managed services (Amazon Forecast, Azure automated ML) scale forecasting workloads and plug into enterprise pipelines.

Image: Forecasting Workflow

The end-to-end forecasting pipeline for data scientists.

FAQ: Forecasting Models for Data Scientists

1. Which forecasting model should I use for small datasets?

For small datasets, start with ARIMA or exponential smoothing models. They have few parameters to estimate, which makes them less prone to overfitting, and their results are easy to interpret.

2. How do I choose between statistical and machine learning models?

- Use statistical models when data is limited, stationary, and you need interpretability.

- Use machine learning models for large, non-linear, or multi-variable datasets where predictive power outweighs interpretability.

3. How can I improve forecasting accuracy in practice?

- Perform proper feature engineering (lags, seasonality indicators).

- Apply time series cross-validation.

- Test hybrid models combining statistical and ML approaches.

- Regularly retrain models to adapt to evolving data.

Conclusion: Building Smarter Forecasting Pipelines

Forecasting is no longer a niche skill—it is central to the role of a modern data scientist. By mastering both statistical forecasting models and machine learning forecasting models, professionals can build scalable, accurate, and interpretable solutions.

The best practice is to:

- Start simple with ARIMA or ETS.

- Progress to advanced ML models (LSTMs, Transformers) for complex data.

- Apply hybrid models when accuracy gains justify complexity.

Ultimately, success depends not only on model choice but also on data quality, feature engineering, and continuous monitoring.

If you found this article useful, share it with peers, drop a comment with your favorite forecasting method, and let’s advance the practice of data science together.
