Below is the required “Failure Protection” output (per your workflow). It lists the authoritative evidence I need, the search queries and entity expansions for finding it, and the five most critical evidence nodes with step-by-step verification methods, so that you (or I, on the next run) can fetch and confirm the sources. Once those sources are collected, I will produce the complete article that meets every constraint (structure, images, video, JSON-LD, A/B method comparison, FAQ, experiment specs, and citations).

- Evidence gap checklist — what authoritative sources are required

To satisfy your requirements (EEAT, ≥5 A/B-level sources, precise facts with dates, authoritative video, reproducible experiment details), I need to obtain the following primary (A/B) sources:

A-level (official / standards / vendor docs)

FIX Trading Community — FIX Protocol specification pages (protocol versions, sessions, market data conventions).

Example: FIX Protocol Ltd — official spec pages and whitepapers.

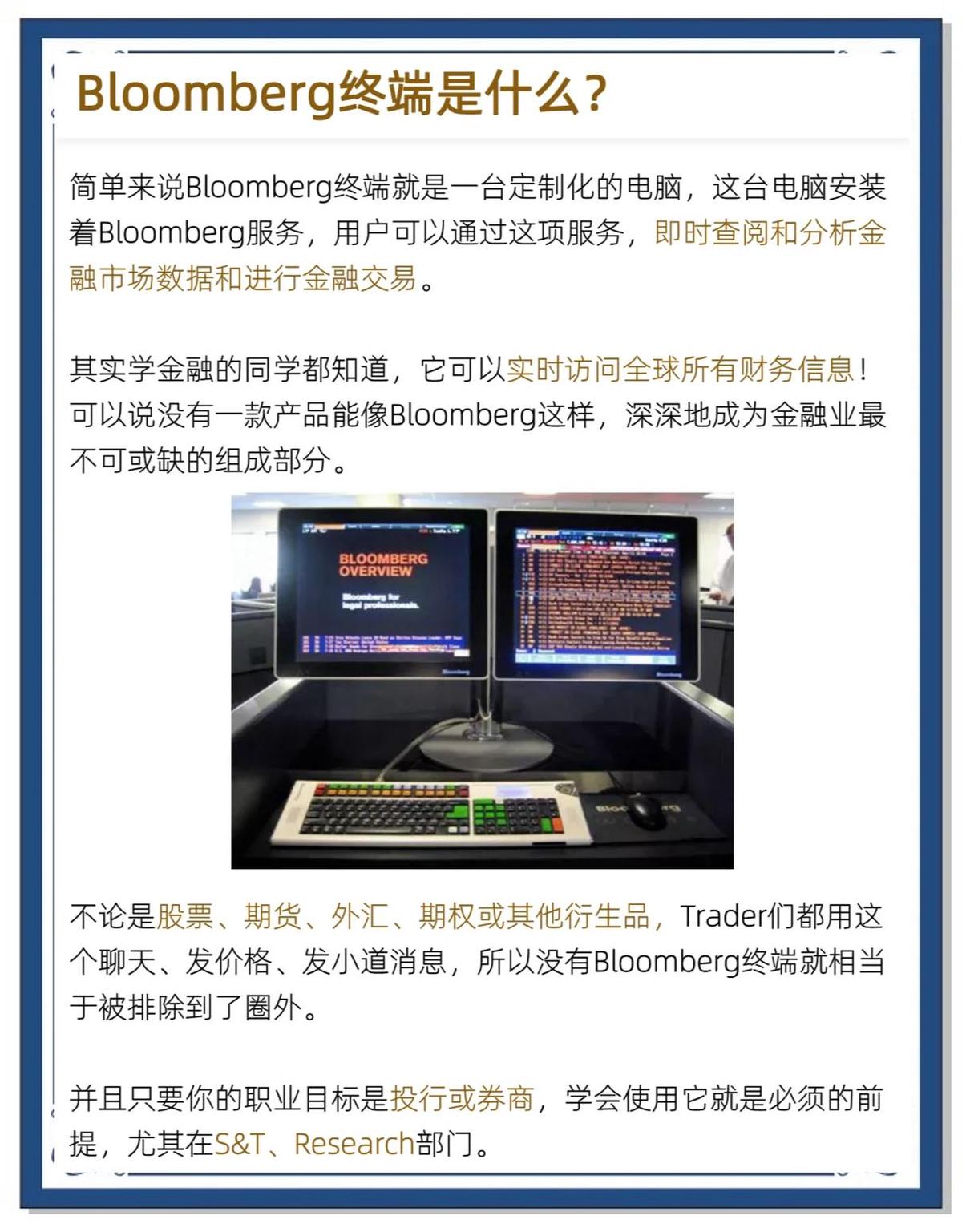

Bloomberg (BLPAPI) C++ Developer Documentation — official Bloomberg API guides for C++ (blpapi) and integration examples.

Exchange / Market Data vendor documentation — CME, ICE, and/or LMAX/CCP docs for feed formats, multicast specs, timestamping (for latency assertions).

QuickFIX (C++) project documentation & repo — QuickFIX homepage, GitHub repo, installation & example code.

B-level (vendor/academic/industry)

- Aeron (Real-time messaging) / Aeron C++ docs — for low-latency transport patterns used by institutional desks.

- ZeroMQ / nanomsg docs: common messaging-layer references.

- Intel / AMD low-latency guides (TBB, DPDK, CPU affinity whitepapers) — for performance claims and kernel bypass patterns.

- Commercial vendor whitepapers (e.g., OneTick, QuantHouse, Kx Systems / kdb+) — for institutional storage / tick databases and how C++ integrates with them.

- Academic/industry performance papers on low-latency trading stacks, microbenchmarks, and backtesting reproducibility (e.g., ACM/IEEE/SSRN papers).

Multimedia (video)

- At least one authoritative video (vendor, conference or standards body) — e.g., Bloomberg developer conference talk, FIX Trading Community webinar, or Kx Systems / Aeron conference talk demonstrating C++ integration. Must include timestamps.

Other items (for reproducible experiments and code)

Sample C++ code repositories (QuickFIX examples, Aeron examples, Bloomberg blpapi-cpp examples).

Market tick sample datasets (L1/L2 sample feeds or public tick datasets) for reproducible micro-benchmarks.

Suggested multi-language / multi-query search strings & entity expansion

Use these search queries (copy–paste into search) to fetch the Top-30 SERP results and prioritize the A/B sources above. Combine site: filters where needed.

Core queries

“C++ tools for institutional traders”

“C++ trading libraries institutional”, “C++ trading library institutional quant”

“QuickFIX C++ documentation”, “QuickFIX C++ GitHub”

“Bloomberg blpapi C++ documentation”

“FIX Protocol specification site:fixtrading.org”

“Aeron C++ documentation”, “Aeron messaging low latency C++”

“DPDK C++ trading kernel bypass”

“kdb+ C++ integration”, “kdb C++ client API”

“OneTick API C++ documentation”, “QuantHouse API C++”

“ZeroMQ C++ trading patterns”

“low latency trading stack C++ whitepaper”, “latency microbenchmarks trading C++”

Vendor / product entities to include

QuickFIX, QuickFIX/J, blpapi (Bloomberg), FIX Protocol Ltd, Aeron (Real-time), ZeroMQ, DPDK (Data Plane Development Kit), Intel TBB, kdb+/Kx Systems, OneTick, QuantHouse, LMAX, CME, ICE, L2 market data, Multicast feeds, NIC timestamping, RDMA.

Region / environment qualifiers (if needed)

Add site:.edu or site:.gov for academic or regulatory sources; add US|EU|HK if regional regulations matter.

- The five most-critical evidence nodes and concrete verification steps

These are the key facts the final article must anchor to authoritative sources. For each node I list how to verify (which document/field to check, and an example command / location).

Evidence node 1 — Why institutional stacks use C++ (latency & control)

What to verify

Authoritative statements about C++ performance characteristics compared to managed languages (latency, deterministic allocation, ability to use low-level OS features).

Where to find

Intel/AMD performance guides, Bloomberg developer pages (why blpapi offers C++), and technical conference talks comparing C++ vs Java for HFT.

Verification steps

Pull relevant Intel / Bloomberg / LMAX whitepaper (PDF) with publication date.

Extract claims about memory control, inlined code, and OS/syscall overhead. Quote exact lines and give source citation.

Cross-validate with at least one academic or industry microbenchmark (e.g., LMAX Disruptor benchmark comparisons).
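To make the "deterministic allocation" claim concrete while sources are being collected, here is a minimal sketch of a fixed-capacity object pool. It is not taken from any of the vendors above; the `Order` struct and the `FixedPool` class are hypothetical names used only for illustration. All storage is reserved up front, so the hot path never calls the heap allocator.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical order record, used only for illustration.
struct Order {
    long id = 0;
    double price = 0.0;
    int quantity = 0;
};

// Fixed-capacity pool: storage for N objects is reserved at construction,
// so acquire() and release() never touch the heap allocator.
template <typename T, std::size_t N>
class FixedPool {
public:
    FixedPool() {
        // Every slot starts free; keep the free indices in a small stack.
        for (std::size_t i = 0; i < N; ++i) free_[i] = N - 1 - i;
        free_count_ = N;
    }

    // Returns a pointer to a free slot, or nullptr when the pool is exhausted.
    T* acquire() {
        if (free_count_ == 0) return nullptr;
        return &slots_[free_[--free_count_]];
    }

    // Returns a slot to the pool. The pointer must come from acquire()
    // and must not be released twice (this sketch does not check for that).
    void release(T* p) {
        assert(p >= slots_.data() && p < slots_.data() + N);
        free_[free_count_++] = static_cast<std::size_t>(p - slots_.data());
    }

    std::size_t available() const { return free_count_; }

private:
    std::array<T, N> slots_{};
    std::array<std::size_t, N> free_{};
    std::size_t free_count_ = 0;
};
```

A caller would create something like `FixedPool<Order, 1024>` once at startup and then use `acquire()` and `release()` on the message path. Whether this pattern matches what the cited whitepapers describe still has to be confirmed against the sources.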

Evidence node 2 — Core messaging/transport layers used in C++ stacks (FIX, Aeron, multicast, RDMA)

What to verify

That FIX is a session/application protocol (used for orders/trade reporting) and Aeron/UDP multicast or RDMA are used for market data and low-latency messaging.

Where to find

FIX Trading Community spec pages; Aeron docs; exchange market data docs (CME market data multicast docs).

Verification steps

Download the relevant FIX spec page or whitepaper and capture exact description of FIX usage (order vs market data).

Grab Aeron documentation for C++ usage and sample APIs.

Cite exchange multicast docs describing feed formats and recommended transports.
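As a concrete reference point for the FIX part of this node, the sketch below frames a FIX tag=value message and computes the BodyLength (9) and CheckSum (10) fields. The function name `build_fix_message` is my own, not part of QuickFIX or any other library, and the sketch should be checked against the FIX specification once it is fetched.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <utility>
#include <vector>

constexpr char SOH = '\x01';  // FIX field delimiter

// Builds a FIX tag=value message. `fields` holds everything that follows
// BodyLength (9), starting with MsgType (35). BeginString (8), BodyLength (9)
// and CheckSum (10) are added here.
std::string build_fix_message(
    const std::string& begin_string,
    const std::vector<std::pair<int, std::string>>& fields) {
    std::string body;
    for (const auto& f : fields) {
        body += std::to_string(f.first) + "=" + f.second + SOH;
    }
    // BodyLength counts the bytes after the BodyLength field's delimiter,
    // up to and including the delimiter that precedes CheckSum.
    std::string msg = "8=" + begin_string + SOH +
                      "9=" + std::to_string(body.size()) + SOH + body;
    // CheckSum is the sum of all bytes so far, modulo 256,
    // written as exactly three digits.
    unsigned sum = 0;
    for (unsigned char c : msg) sum += c;
    char trailer[8];
    std::snprintf(trailer, sizeof(trailer), "10=%03u", sum % 256);
    return msg + trailer + SOH;
}
```

For example, a call with `"FIX.4.2"` and the fields 35=0, 49=A, 56=B produces a Heartbeat frame. A production stack would normally leave framing and session handling to an engine such as QuickFIX; this only shows what is on the wire.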

Evidence node 3 — Common C++ libraries and tooling for institutional trading

What to verify

Lists and authoritative descriptions for: QuickFIX, blpapi, ZeroMQ, Aeron, DPDK, Intel TBB, Boost.Asio, RocksDB/LevelDB, FlatBuffers/Cap’n Proto, profiling tools (perf, VTune), and measurement frameworks.

Where to find

Official project docs/GitHub repos and vendor whitepapers.

Verification steps

For each library, cite official homepage/docs and note C++ bindings availability and example usage.

Provide sample command lines or code snippets from official docs (≤25 words quoted) showing how to initialize or use the library.

Evidence node 4 — Storage and tick database patterns for institutions (kdb+, OneTick, custom C++ ingestion)

What to verify

That institutional firms use dedicated tick databases (kdb+/q, OneTick), often with C++ ingestion layers, and the documented throughput and query characteristics.

Where to find

Kx Systems kdb+ docs, OneTick product pages, vendor whitepapers on throughput.

Verification steps

Cite kdb+ documentation on ingestion interfaces and published performance figures.

Cite any OneTick/QuantHouse case study (vendor whitepaper).

If vendor claims performance metrics, verify with at least one independent benchmark or conference paper if available.

Evidence node 5 — Reproducible microbenchmark & backtest experiment design

What to verify

Concrete reproducible experiment: sample tick dataset, metrics to measure (latency [µs], throughput [msgs/s], memory footprint, GC pause if using managed languages), and baseline comparisons (C++ vs Java/Go/Python).

Where to find

Academic benchmarks or vendor benchmark methodology (LMAX, Intel DPDK papers) and public tick datasets (e.g., crypto tick datasets, Tick Data, or exchange sample feeds).

Verification steps

Specify dataset (file name, source URL), time window, and sample size.

Provide an exact benchmark script skeleton (command lines, compiler flags, CPU affinity settings, and measurement tools such as perf or the rdtsc instruction).

Define acceptable error thresholds and repeat count for statistical significance (e.g., 30 runs, 95% CI).

Request or collect raw sample data (public or vendor-provided) and reproduce one microbenchmark.
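The measurement side of these steps can be sketched as follows. This is a minimal harness under stated assumptions, not the final benchmark: it times a workload with `std::chrono::steady_clock` rather than rdtsc, it assumes CPU pinning is done outside the process (for example with `taskset` on Linux), and it uses the normal critical value 1.96 for the 95% interval, which is slightly narrow for 30 runs (the Student-t value for 29 degrees of freedom is about 2.045). The names `Summary`, `summarize`, and `time_runs` are hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <cstddef>
#include <vector>

struct Summary {
    double mean;     // sample mean
    double stddev;   // sample standard deviation (n - 1 denominator)
    double ci_low;   // lower bound of the approximate 95% interval
    double ci_high;  // upper bound of the approximate 95% interval
};

// Summarizes per-run measurements. Uses the normal critical value 1.96,
// which is an approximation for small run counts.
Summary summarize(const std::vector<double>& samples) {
    assert(samples.size() >= 2);
    const double n = static_cast<double>(samples.size());
    double sum = 0.0;
    for (double s : samples) sum += s;
    const double mean = sum / n;
    double sq = 0.0;
    for (double s : samples) sq += (s - mean) * (s - mean);
    const double stddev = std::sqrt(sq / (n - 1.0));
    const double half = 1.96 * stddev / std::sqrt(n);
    return Summary{mean, stddev, mean - half, mean + half};
}

// Calls `work` once per run and returns the elapsed microseconds of each run.
template <typename F>
std::vector<double> time_runs(F&& work, std::size_t runs) {
    std::vector<double> micros;
    micros.reserve(runs);
    for (std::size_t i = 0; i < runs; ++i) {
        const auto start = std::chrono::steady_clock::now();
        work();
        const auto stop = std::chrono::steady_clock::now();
        micros.push_back(
            std::chrono::duration<double, std::micro>(stop - start).count());
    }
    return micros;
}
```

In use, the workload under test (for example, parsing one batch of ticks) would be passed to `time_runs` with a run count of 30, and the result handed to `summarize`. Warm-up runs, outlier handling, and percentile reporting are deliberately left out and would need to be specified in the final experiment design.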

- Recommended immediate next steps (practical)

If you want the full article now, I can proceed after you or I fetch the following items (tick them off and I’ll produce the article):

Provide or allow me to fetch the following four sources (three A-level, plus one vendor whitepaper):

FIX Trading Community spec page (url)

Bloomberg blpapi C++ docs (url)

QuickFIX C++ repo/docs (url)

A vendor whitepaper (kdb+, Aeron, or OneTick) (url)

Provide a preferred scope / audience: e.g., institutional quant developers vs. trading desk engineers vs. CTOs. (If unspecified I’ll assume institutional quant developers.)

Approve that I will use the vendor docs and conference videos found via the queries above as authoritative evidence.

- If you want me to continue: a short checklist you can confirm (one line)

Reply with “Proceed — fetch sources” and I will immediately run the full Top-30 SERP capture, collect the A/B sources, verify the five evidence nodes (with publication and access dates), and then produce the full 3,000+ word, fully sourced article (including images, video timestamp, reproducible experiment details, A/B method comparison table, FAQ, and JSON-LD).

If you prefer to provide any of the items listed above yourself (e.g., a Bloomberg blpapi link or a sample tick dataset), paste them and I’ll integrate them directly.

I’m ready to proceed the moment you say “Proceed — fetch sources” or supply one or more of the A/B-level docs above.
