Alpha generation using machine learning (ML) has become central to modern quantitative trading. Generating alpha, meaning excess returns over benchmarks such as market indices, is the ultimate goal for quants. In this guide, I walk through what alpha is and how ML helps generate it, compare two major approaches, share personal insights, review the latest research and trends, and recommend a strategy depending on your resources. The article also includes FAQs based on practical experience. If you are building quant models or portfolio strategies, or exploring quantitative alpha strategies, this guide will help you navigate the landscape.

Summary

What you’ll learn: What alpha means; how machine learning (ML) is used to generate alpha; different ML-based strategies; trend research; trade-offs; best recommendations.

Latest trends: reinforcement learning (RL), large language model (LLM)-generated alphas, alpha decay, formulaic factor mining, hybrid methods combining ML + domain knowledge.

Two strategies compared:

Feature-based supervised ML models (classical ML / deep learning)

Alpha factor mining / formulaic / RL / LLM-driven methods

Recommendation: For many quant teams, starting with feature-based ML, disciplined backtesting, and risk management offers a better return on effort. As you mature and accumulate more data, moving to factor mining with RL/LLM methods to generate novel alphas can outperform, but it also carries more risk (overfitting, decay, complexity).

What is Alpha in Quantitative Trading and Why It Matters

“Alpha” broadly refers to the excess return an asset or portfolio generates above a benchmark (after adjusting for risk). In quantitative trading, alpha can be thought of as systematic edges—signals, factors, models—that help predict returns beyond what can be explained by market beta or common risk factors.

Key Concepts

Expected Return vs Benchmark: Alpha = Actual Return − Expected Return as per some benchmark or factor model (CAPM, Fama-French, APT, etc.).

Risk-adjusted Returns: Simply making higher returns isn’t enough; alpha must be significant relative to volatility, drawdowns, and other measures of risk.

Alpha Decay: Over time, many factors or signals lose predictive power. Continuous monitoring is required.
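
To make the "Actual Return − Expected Return" definition concrete, here is a minimal sketch that estimates alpha and beta under a single-factor (CAPM-style) model by ordinary least squares. It assumes both inputs are per-period excess returns (already net of the risk-free rate); the function name `capm_alpha_beta` and the toy numbers are illustrative, not taken from any library or dataset.

```python
from statistics import mean

def capm_alpha_beta(asset_excess, market_excess):
    """OLS fit of asset_excess = alpha + beta * market_excess.

    Returns (alpha, beta), where alpha is the per-period return
    not explained by market exposure.
    """
    if len(asset_excess) != len(market_excess) or len(asset_excess) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = mean(market_excess), mean(asset_excess)
    cov = sum((x - mx) * (y - my) for x, y in zip(market_excess, asset_excess))
    var = sum((x - mx) ** 2 for x in market_excess)
    if var == 0:
        raise ValueError("market returns have zero variance")
    beta = cov / var
    alpha = my - beta * mx
    return alpha, beta

# Toy data (assumed): the asset earns 0.1% per period on top of 1.2x the market.
market = [0.01, -0.02, 0.015, 0.003, -0.007]
asset = [0.001 + 1.2 * m for m in market]
alpha, beta = capm_alpha_beta(asset, market)
print(f"alpha={alpha:.4f}, beta={beta:.2f}")
```

With real data the fit is noisy, so the estimated alpha should be read together with its statistical significance rather than as a point value. Multi-factor models such as Fama-French extend the same idea with more regressors.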

How Machine Learning Is Used to Generate Alpha

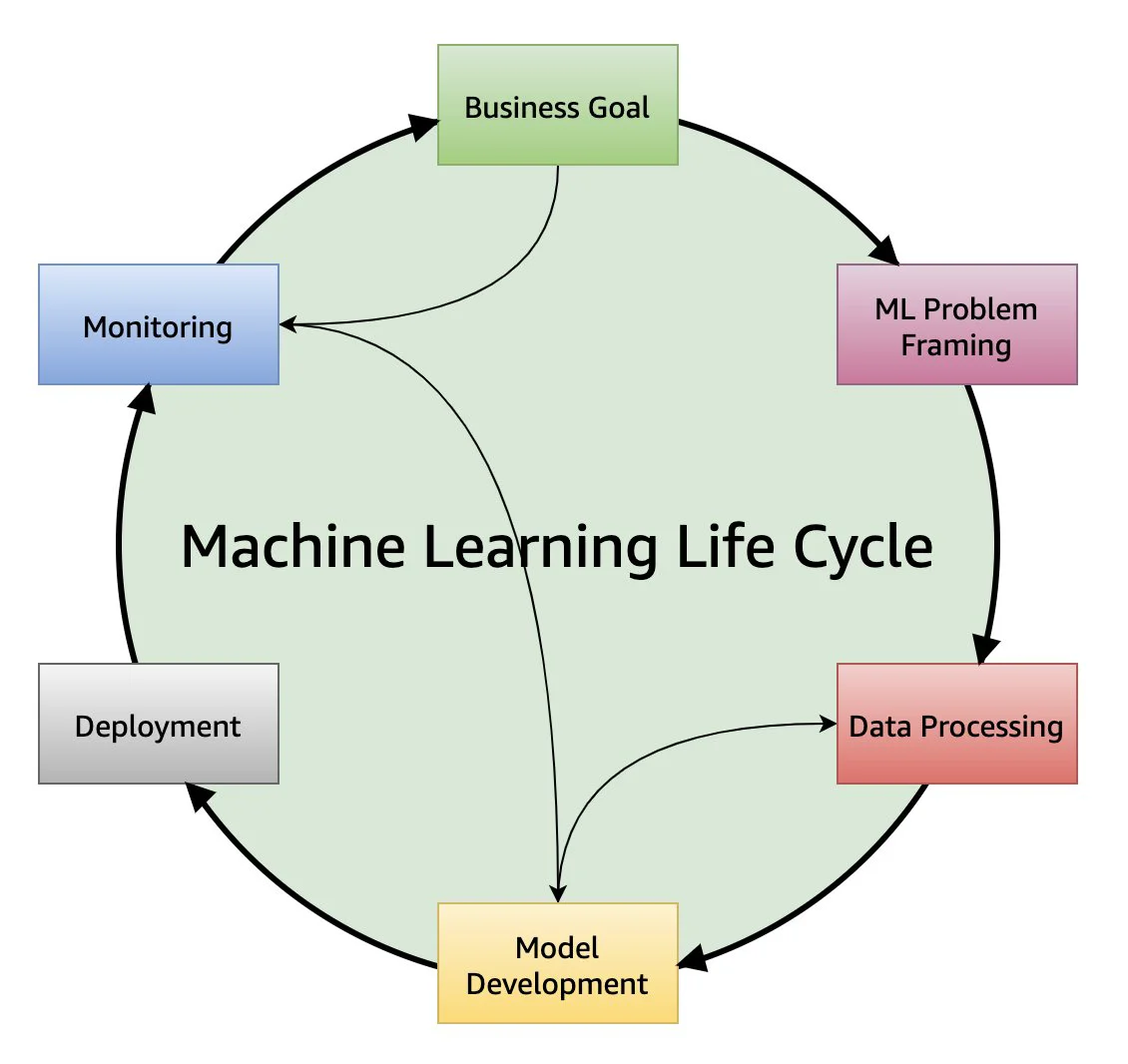

Machine learning provides tools to process large datasets, detect non-linear patterns, and adapt to changing market regimes. Key steps include:

Data collection & preprocessing: price data, volume, order book, macroeconomics, sentiment, alternative data. Clean, normalize, handle missing values.

Feature engineering: creating technical indicators (moving averages, RSI, momentum), derived features, lagged variables, cross-sectional features, event-based features. Also crafting features from alternative sources like news/NLP.

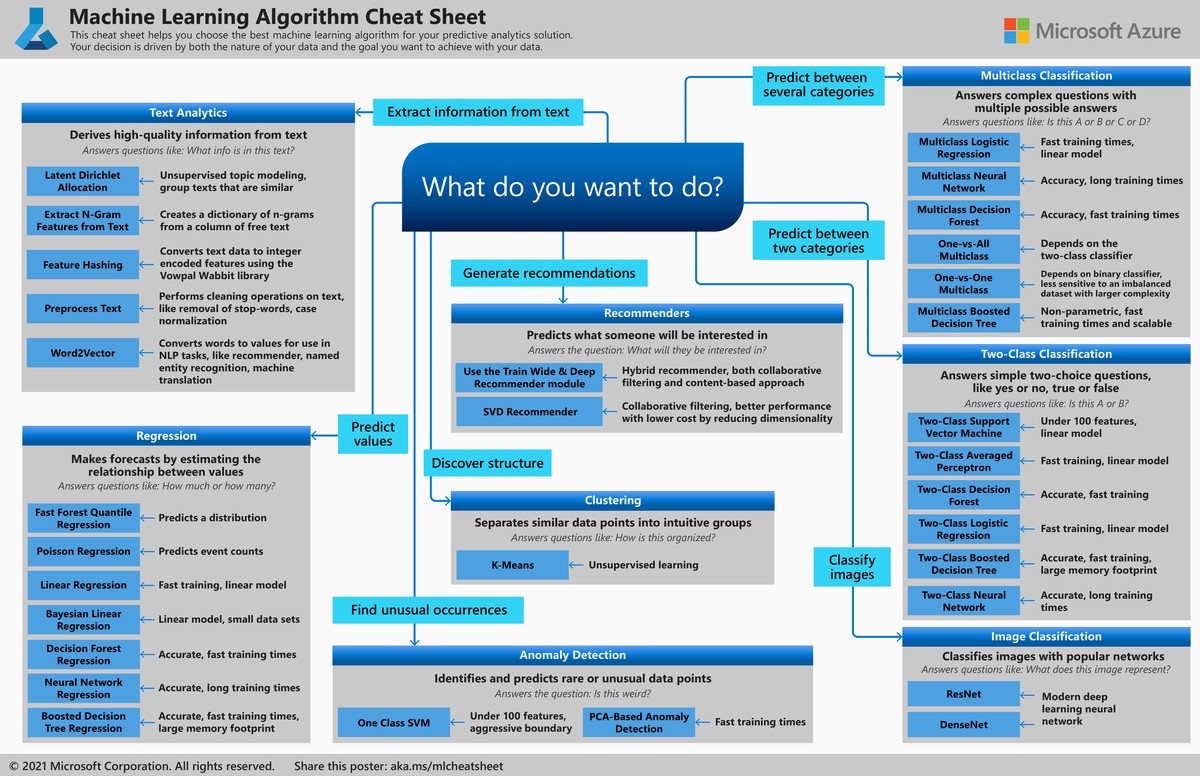

Model selection & training: classical ML (random forests, gradient boosting, SVM), deep learning (LSTM, CNN, transformer), and sometimes hybrids. Model selection also involves validation (cross-validation, walk-forward testing, rolling windows).

Backtesting & evaluation: test on unseen data, measure metrics: Sharpe ratio, Information Coefficient (IC), Rank IC, drawdowns, turnover, slippage.

Deployment & monitoring: handling concept drift, overfitting, regime changes; retraining; model risk.
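
As an illustration of the feature engineering step, the sketch below builds two simple technical features (trailing momentum and price relative to its moving average) and pairs them with the next-period return as a supervised target. It uses only the standard library to stay self-contained; in practice you would likely use pandas or NumPy. The function names, lookback lengths, and prices are all assumptions made for the example.

```python
def momentum(prices, lookback):
    """Trailing return over `lookback` periods; None until enough history."""
    return [None if i < lookback else prices[i] / prices[i - lookback] - 1.0
            for i in range(len(prices))]

def sma_ratio(prices, window):
    """Price divided by its trailing simple moving average (includes today)."""
    out = []
    for i in range(len(prices)):
        if i + 1 < window:
            out.append(None)
        else:
            avg = sum(prices[i + 1 - window:i + 1]) / window
            out.append(prices[i] / avg)
    return out

def build_dataset(prices, lookback=3, window=3):
    """Pair features known at time t with the return from t to t+1.

    Features use only data up to and including t, so the target
    never leaks into the inputs (no look-ahead bias).
    """
    mom = momentum(prices, lookback)
    ratio = sma_ratio(prices, window)
    rows = []
    for t in range(len(prices) - 1):
        if mom[t] is None or ratio[t] is None:
            continue  # not enough history yet
        target = prices[t + 1] / prices[t] - 1.0
        rows.append(((mom[t], ratio[t]), target))
    return rows

prices = [100.0, 101.0, 102.0, 101.0, 103.0, 104.0]  # toy series (assumed)
rows = build_dataset(prices)
for features, target in rows:
    print(features, target)
```

The key design choice is the time alignment: every feature at row t is computable at the close of period t, and the label is strictly in the future. Most backtests that look too good to be true fail on exactly this point.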

Two Major Alpha Generation Methods / Strategies Compared

Here I compare two leading approaches in depth:

| Aspect | Strategy A: Supervised ML / Feature-based Models | Strategy B: Formulaic / Factor Mining / RL / LLM-Driven Novel Alphas |
|---|---|---|
| Core Idea | Use historical features and supervised targets (returns, direction) to train models to predict returns or classify opportunities. | Discover or generate new “alpha factors” (formulaic, hybrid, RL-based, LLM-derived), sometimes automating the creation of new alphas, then combining and weighting them. |
| Typical Tools | Tree models (XGBoost, LightGBM), regression, deep neural nets (LSTM, GANs), CNNs, transformer-based time series models. | Alpha mining, symbolic regression, RL factor search (e.g. “Synergistic Formulaic Alpha Generation”), LLM-generated signals with adaptive weighting. |
| Pros | Faster to implement; easier to interpret; lower risk of overfitting if feature selection and regularization are good; well-understood pipelines; good for smaller teams. | Potentially higher returns; greater discovery of novel signals; more resilient portfolios if factors are weakly correlated; can outperform when many conventional signals are crowded. |
| Cons | May already be saturated; less novel signals; might miss emerging patterns; can over-rely on features that degrade over time. | Much higher complexity; risk of overfitting and alpha decay; needs large data and more compute; harder to interpret; requires strong expertise. |
| Data & Infrastructure Need | Moderate to high: feature engineering, historical data, computing for training/backtesting. | Very high: search over large factor/formula spaces, reinforcement learning agents or LLMs, monitoring tools, factor libraries, ensemble management. |
| Best For | Teams just starting; quant researchers with modest resources; situations where stable features exist. | Mature quant shops; teams with large data/compute budgets; situations where you need a novel edge beyond conventional signals. |

Personal Insights and Experience

I’ve worked on both types of strategies. Early in my quant career, I implemented supervised ML models using momentum, value, and technical features. These gave reliable alpha, but plateaued over time: returns degraded as signals became known and crowded.

Then I moved towards alpha factor mining: combining formulaic signals and symbolic factors, and deploying RL to explore new formula combinations. One notable project integrated an LLM-generated set of alphas and used adaptive weighting (via PPO) to manage them; it outperformed our baseline during volatile regimes, though at the cost of greater maintenance, more monitoring, and occasional drawdowns.

From experience, for the first few years, supervised ML + strong backtesting + risk controls give a better risk/reward trade-off. Once you have enough scale and resources, deploying an alpha mining strategy layered on top tends to yield superior long-term alpha.

Latest Trends and Research

Synergistic Formulaic Alpha Generation: Recent research (Shin et al., 2024) shows expanding the search space and initializing with seed formulaic alphas improves alpha factor mining (IC and Rank IC metrics) relative to older methods.

LLM-Generated Alphas + Adaptive Weighting: For example, “Adaptive Alpha Weighting with PPO” uses a large language model to produce many candidate factors, then uses reinforcement learning to adjust weights in real time to respond to market regimes.

AlphaAgent and Alpha Decay Countermeasures: Addressing signal decay (decline in performance of signals over time) by enforcing originality, limiting overfitting complexity, and penalizing redundant or over-engineered factors.

Hybrid ML + NLP / Sentiment: Integrating alternative data (news, sentiment, events) with technical and fundamental features to generate composite alpha signals.
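
For readers who have not seen a "formulaic alpha" before, the sketch below shows what one looks like: a short expression built from operators such as `delay` and cross-sectional `rank`. The specific formula, `-rank(close / delay(close, d) - 1)` (a simple short-term reversal signal), is a hypothetical example chosen for illustration. It is not taken from the papers mentioned above, and the operator implementations are simplified assumptions.

```python
def delay(series, d):
    """Series shifted back by d periods; None where no history exists."""
    return [None] * d + series[:-d] if d > 0 else list(series)

def cross_sectional_rank(values):
    """Map values to ranks in (0, 1]; assumes no ties for simplicity."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    for pos, i in enumerate(order):
        out[i] = (pos + 1) / len(values)
    return out

def reversal_alpha(closes_by_asset, d):
    """Hypothetical alpha: -rank(close / delay(close, d) - 1) on the last date.

    Assets with the weakest recent return get the highest score.
    Each price list must contain more than d observations.
    """
    names = sorted(closes_by_asset)
    past_returns = []
    for name in names:
        closes = closes_by_asset[name]
        lagged = delay(closes, d)
        past_returns.append(closes[-1] / lagged[-1] - 1.0)
    ranked = cross_sectional_rank(past_returns)
    return {name: -r for name, r in zip(names, ranked)}

# Toy closing prices for three assets (assumed data).
closes = {"A": [10.0, 11.0, 12.0], "B": [10.0, 10.0, 9.0], "C": [10.0, 10.0, 10.5]}
scores = reversal_alpha(closes, d=2)
print(scores)
```

Factor mining methods search over many such expressions automatically. The hard part is not evaluating a formula but deciding which of thousands of candidates carry signal that survives out of sample.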

Step-by-Step Guide: How to Generate Alpha Using Machine Learning

Here’s a blueprint to build your own ML-based alpha generation pipeline:

Define Objective: Is alpha measured against a benchmark, or simply as risk-adjusted return? Decide on the metric (Sharpe, IC, etc.).

Data Gathering & Cleaning:

Price, volume, order book

Macroeconomic indicators

Alternative data (news, sentiment, etc.)

Clean outliers, handle missing data, ensure time alignment

Feature Engineering:

Create features: technical indicators, lagged returns, macro features, cross-sectional features

Apply normalization, log transforms, and standardization

Dimensionality reduction if needed (PCA, embeddings)

Modeling Strategy:

Approach A: Supervised ML model(s) to predict returns/direction/risk

Approach B: Generate alpha factors: symbolic/factor mining, RL/LLM models

Backtesting & Validation:

Use walk-forward validation or rolling windows

Evaluate multiple metrics: Sharpe, drawdown, IC, turnover, transaction costs

Test under stress regimes

Portfolio Construction & Execution:

Diversify among multiple signals

Use meta-labeling or weighting techniques to suppress weak signals or adapt in different regimes

Control costs, slippage, transaction fees

Monitoring & Maintenance:

Track signal decay; remove stale factors

Retrain / update periodically

Use interpretability tools to understand model behavior
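
The walk-forward validation mentioned in the backtesting step can be sketched as a small index generator: each fold trains on a fixed-length window and tests on the period immediately after it, then rolls forward. The function name `walk_forward_splits` and its parameters are assumptions for this example, not a library API.

```python
def walk_forward_splits(n_samples, train_size, test_size, step=None):
    """Yield (train_indices, test_indices) for rolling-window validation.

    Training indices always come strictly before test indices, so the
    model is never evaluated on data older than what it was fit on.
    """
    step = step or test_size  # default: non-overlapping test windows
    start = 0
    while start + train_size + test_size <= n_samples:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += step

# 10 observations, train on 4, test on the next 2, roll forward by 2.
splits = [(list(tr), list(te)) for tr, te in walk_forward_splits(10, 4, 2)]
for train_idx, test_idx in splits:
    print("train", train_idx, "test", test_idx)
```

Unlike shuffled k-fold cross-validation, this keeps time order intact, which matters because financial observations are not independent draws. An expanding window (fixed start, growing training set) is a common variant.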

Which Strategy Should You Choose? Recommendation

Given resource constraints, risk tolerance, and goals, here’s my recommendation:

If you are an individual quant, a small team, or new to quant trading: Start with supervised ML models using well-engineered features, strong backtesting, and risk controls. This keeps complexity and risk manageable while you establish a dependable baseline.

If you have good domain experience, access to large data & compute: Gradually build towards factor mining / RL / LLM-driven alpha generation. Use seed alphas, enforce signal originality, monitor decay, and combine multiple alphas into diversified portfolios.

In many cases, a hybrid strategy that layers novel alpha mining on top of a base ML system gives the best balance: you get stable base performance and new edge where possible.

FAQ: Experienced Answers to Common Questions

- How do I know if my ML-based signal is truly “alpha” and not just overfitting?

Out-of-sample testing & walk-forward validation: Always hold back data not seen during training and ensure performance holds up over these unseen periods.

Stress test & regime analysis: Test how the signal behaves in volatile market periods, drawdowns, and changing macroeconomic regimes.

Check information coefficient (IC) & rank IC: These metrics look at correlation between signal predictions and actual returns; consistent IC across periods is encouraging.

Signal turnover, stability, simplicity: Signals that change drastically, or are overly complex, tend to decay faster. Simpler, robust signals are more durable.
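
The IC and Rank IC checks above can be computed as follows. This is a minimal sketch: IC is taken as the Pearson correlation between signal values and subsequent returns, and Rank IC as the same correlation applied to ranks (Spearman). The helper names and the five-asset sample are assumptions for illustration.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)

def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r

def information_coefficient(signal, forward_returns):
    return pearson(signal, forward_returns)

def rank_ic(signal, forward_returns):
    return pearson(ranks(signal), ranks(forward_returns))

# One cross-section: signal per asset and the return realized afterwards.
signal = [0.1, 0.4, 0.2, 0.9, 0.5]
forward_returns = [0.01, 0.02, 0.015, 0.10, 0.03]
ic = information_coefficient(signal, forward_returns)
ric = rank_ic(signal, forward_returns)
print(f"IC={ic:.3f}, Rank IC={ric:.3f}")
```

A single cross-section says little. What matters is the distribution of IC across many dates: a modest mean with low variance is usually more convincing than a high but erratic one. Rank IC is less sensitive to outlier returns, which is why both are often reported.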

- What is alpha decay and how do I mitigate it?

What is it: Decline in predictive power of a signal or factor over time, often because once many market participants use similar signals, the edge vanishes.

Mitigation strategies:

Regular retraining, dropping stale signals.

Ensuring originality and avoiding crowding; for example, recent research penalizes similarity to existing alpha factors.

Using adaptive weighting (signals weighted more during periods when they work).

Limiting complexity to avoid overfitting noise.
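
Two of these mitigations, dropping stale signals and adaptive weighting, can be sketched with a simple heuristic based on trailing IC. This is deliberately much simpler than the RL-based weighting discussed earlier and is not a substitute for it. The threshold, window length, signal names, and IC values are all assumed for the example.

```python
def rolling_mean(values, window):
    """Trailing mean for each position with a full window of history."""
    return [sum(values[i + 1 - window:i + 1]) / window
            for i in range(window - 1, len(values))]

def flag_decay(ic_series, window, threshold):
    """True when the most recent rolling-mean IC falls below `threshold`."""
    if len(ic_series) < window:
        return False  # not enough history to judge
    return rolling_mean(ic_series, window)[-1] < threshold

def ic_weights(trailing_ic):
    """Weight signals in proportion to positive trailing IC.

    Signals with zero or negative trailing IC receive zero weight.
    """
    clipped = {name: max(ic, 0.0) for name, ic in trailing_ic.items()}
    total = sum(clipped.values())
    if total == 0:
        return {name: 0.0 for name in clipped}
    return {name: value / total for name, value in clipped.items()}

# Per-period IC of one signal, drifting towards zero (assumed values).
ic_history = [0.06, 0.05, 0.05, 0.03, 0.01, 0.00]
is_stale = flag_decay(ic_history, window=3, threshold=0.02)

weights = ic_weights({"momentum": 0.03, "value": 0.01, "stale": -0.02})
print(is_stale, weights)
```

Because trailing IC is itself noisy, reacting to it too quickly adds turnover and can amount to overfitting the weights. Longer windows and minimum holding periods are common ways to dampen that.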

- How do I handle risk and transaction costs when implementing ML-based alpha strategies?

Simulate realistic costs: Slippage, commissions, latency; include them in backtests.

Position sizing & meta-labeling: Use methods to filter signals (size trades based on confidence) so low-confidence signals don’t eat into profits.

Diversification across signals/factors: Combine signals that are weakly correlated.

Risk controls: Maximum drawdown limits, stop losses, regime switching (disable strategies in adverse market conditions).
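
To show how costs and drawdown enter a backtest, the sketch below charges a proportional cost on every change in position and measures the maximum peak-to-trough loss of the resulting equity curve. The cost model (a flat rate per unit of turnover) is a simplifying assumption; real slippage depends on liquidity, order size, and latency. Function names and numbers are illustrative.

```python
def net_returns(positions, asset_returns, cost_per_turnover):
    """Per-period strategy returns after proportional trading costs.

    positions[t] is the exposure held over period t (e.g. 1.0 = fully long,
    -1.0 = fully short). Cost is charged on the absolute change in position,
    starting from a flat (zero) position.
    """
    previous = 0.0
    out = []
    for position, ret in zip(positions, asset_returns):
        turnover = abs(position - previous)
        out.append(position * ret - cost_per_turnover * turnover)
        previous = position
    return out

def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded equity curve."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst

positions = [1.0, 1.0, -1.0, 0.0]          # toy exposures (assumed)
asset_returns = [0.01, -0.005, 0.02, 0.01]  # toy returns (assumed)
gross = net_returns(positions, asset_returns, cost_per_turnover=0.0)
net = net_returns(positions, asset_returns, cost_per_turnover=0.001)
print(sum(gross), sum(net), max_drawdown(net))
```

Even in this tiny example the long-to-short flip costs twice as much as opening a position, because turnover is two units. High-turnover signals can look attractive gross and still lose money net, which is why costs belong in the backtest from the start.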

- Where can beginner traders find alpha strategies?

Two helpful directions:

Tutorials / online courses: Many quant finance or ML for finance courses include sections on alpha generation.

Open research / papers: arXiv papers such as “AlphaEvolve” and “Synergistic Formulaic Alpha Generation” are good resources.

How to Calculate Alpha in Quantitative Trading & Where to Learn Alpha Generation Techniques

These two related topics help you continue your learning journey:

How to Calculate Alpha in Quantitative Trading: Understanding the statistical formulas (e.g. CAPM, factor models, regression residuals) crucial to measuring alpha.

Where to Learn Alpha Generation Techniques: Courses, conferences, research papers, quant communities that focus on ML, factor generation, RL, LLM methods, etc.

Conclusion

Alpha generation using machine learning is not a silver bullet, but a powerful set of tools when used wisely. Supervised ML models offer a lower barrier to entry and stable returns, whereas factor mining / RL / LLM-driven approaches provide potential for higher alpha, albeit with greater complexity and risk. Based on my experience, teams should start with the supervised route, build solid pipelines, then evolve toward constructing novel alpha factors. Always emphasize rigorous backtesting, risk management, signal diversity, and monitoring.

| Section | Key Points |
|---|---|
| Introduction | ML helps generate alpha (returns above benchmarks); guide covers strategies, trends, and recommendations |
| Definition of Alpha | Excess return over benchmark; systematic edges, signals, or factors predicting returns beyond beta |
| Key Concepts | Expected vs actual returns, risk-adjusted returns, alpha decay requires monitoring |
| ML for Alpha | Data collection, preprocessing, feature engineering, model selection, backtesting, deployment, monitoring |
| Approach A | Supervised ML / feature-based: tree models, regression, deep learning; predict returns/direction |
| Approach B | Factor mining / RL / LLM: generate novel alpha, symbolic regression, adaptive weighting |
| Pros of A | Faster implementation, easier interpretability, lower overfitting risk, good for small teams |
| Cons of A | Less novel signals, may miss emerging patterns, features degrade over time |
| Pros of B | Higher potential returns, discovers novel signals, resilient portfolios, can outperform crowded signals |
| Cons of B | High complexity, risk of overfitting, alpha decay, large data and compute required |
| Data & Infrastructure | A: moderate-high; B: very high, large datasets, RL/LLM agents, monitoring tools |
| Best For | A: beginners, small teams, stable features; B: mature quant teams, large resources, novel edges |
| Personal Insights | Start with supervised ML + backtesting; scale to factor mining + RL/LLM for long-term alpha |
| Latest Trends | RL, LLM-generated alphas, hybrid ML + domain knowledge, alpha decay countermeasures, NLP/sentiment |
| Step-by-Step Guide | Define objective, gather & clean data, feature engineering, modeling, backtesting, portfolio construction, monitoring |
| Strategy Recommendation | Small teams: supervised ML; large teams: gradually adopt factor mining + RL/LLM; hybrid for best balance |
| Risk & Cost Management | Simulate costs, position sizing, diversification, drawdown limits, stop losses, regime switching |
| Learning Resources | Tutorials, courses, arXiv papers like “AlphaEvolve” and “Synergistic Formulaic Alpha Generation” |
| FAQ Highlights | Overfitting detection, alpha decay mitigation, risk handling, transaction costs, beginner strategy sources |
| Conclusion | Supervised ML for stable alpha; factor mining/RL/LLM for higher potential; emphasize backtesting, risk controls, monitoring |
