=================================

Factor models are powerful statistical tools that help data scientists uncover hidden patterns, explain variability in large datasets, and build predictive models. Whether applied in finance, machine learning, or risk management, factor models for data scientists provide a framework to simplify complex data by reducing dimensions into interpretable factors. In this article, we’ll explore how factor models work, why they matter, different strategies to implement them, and practical applications. We’ll also compare methodologies, share real-world insights, and provide FAQs to guide practitioners.

Introduction to Factor Models

What Are Factor Models?

Factor models are statistical models that describe observed variables using a smaller number of latent (unobserved) variables called factors. These factors represent common influences that drive variation across datasets.

For example:

- In finance, factor models explain stock returns through drivers like market risk, size, and value.

- In data science, they help reduce dimensionality, similar to PCA (Principal Component Analysis), while retaining interpretability.

Why Factor Models Are Important for Data Scientists

Factor models bridge the gap between exploratory data analysis and predictive modeling. They:

- Simplify large datasets into manageable factors.

- Enhance interpretability of models.

- Improve prediction accuracy by filtering noise.

- Provide structure for risk management, portfolio optimization, and anomaly detection.

To illustrate, how to build a factor model involves defining factors (e.g., macroeconomic variables, sentiment indicators, or latent features), estimating factor loadings, and validating performance.

Types of Factor Models Used in Data Science

1. Statistical Factor Models

These rely on mathematical techniques to identify latent factors. Examples include:

- PCA (Principal Component Analysis): Extracts orthogonal factors that explain the most variance.

- Factor Analysis: Assumes latent constructs drive observable correlations.

Advantages:

- Data-driven, requiring no prior assumptions.

- Useful for dimensionality reduction.

Disadvantages:

- May lack interpretability.

- Sensitive to noise and scaling.

2. Fundamental Factor Models

These use predefined factors based on domain knowledge. In finance, for example, stock returns might be explained by factors such as P/E ratio, market capitalization, or industry classification.

Advantages:

- Intuitive and interpretable.

- Aligns with domain expertise.

Disadvantages:

- Risk of overfitting if too many factors are included.

- Limited adaptability to new data patterns.

3. Hybrid Factor Models

Combining statistical and fundamental approaches, hybrid models extract latent factors while incorporating domain-driven variables. These are increasingly popular in machine learning pipelines and advanced finance applications.

Advantages:

- Balance between interpretability and predictive power.

- More robust to outliers and market shifts.

Disadvantages:

- Higher complexity in construction and validation.

Comparing Different Factor Modeling Approaches

| Feature | Statistical Models (PCA, FA) | Fundamental Models (Domain-Driven) | Hybrid Models (Combined) |

|---|---|---|---|

| Interpretability | Low | High | Medium-High |

| Predictive Power | Medium | Medium | High |

| Data Dependency | High | Low-Medium | Balanced |

| Use Case | Exploratory analysis, ML pipelines | Finance, economics, portfolio risk | Quant finance, AI models |

Recommendation: For data scientists seeking both predictive power and interpretability, hybrid factor models are often the most effective.

Building Factor Models: Methodology

Step 1: Define Factors

Choose statistical or domain-driven factors. In finance, this could be “market risk” or “liquidity.” In machine learning, factors could represent latent embeddings.

Step 2: Estimate Factor Loadings

Use regression, maximum likelihood estimation, or machine learning algorithms to quantify how strongly each variable is influenced by a factor.

Step 3: Validate the Model

Employ cross-validation, out-of-sample testing, and how to evaluate factor model performance using metrics like R², Sharpe ratio (for finance), or prediction accuracy.

Step 4: Deploy and Monitor

Incorporate the model into pipelines, monitor drift, and update factors as new data arrives.

Practical Strategies for Data Scientists

Strategy 1: Using PCA for Dimensionality Reduction

PCA is a go-to statistical method for data scientists working with high-dimensional datasets. It identifies orthogonal factors that maximize explained variance.

Pros:

- Efficient for preprocessing ML tasks.

- Reduces overfitting in large datasets.

Cons:

- Lack of interpretability.

- Sensitive to scaling.

Strategy 2: Using Domain-Based Fundamental Factors

In specialized fields like finance or healthcare, factors derived from expert knowledge improve interpretability. For instance, in trading, factors such as momentum, value, and volatility explain returns.

Pros:

- Aligns with intuition and practical application.

- Easier to communicate to stakeholders.

Cons:

- Requires strong domain knowledge.

- Risk of outdated assumptions.

Recommended Approach

For most data scientists, the best practice is to combine PCA (statistical) with domain-based factors. This hybrid approach balances interpretability with predictive power, making it more robust across applications.

Applications of Factor Models for Data Scientists

- Finance – Stock return prediction, portfolio construction, risk attribution.

- Healthcare – Identifying latent health factors from patient data.

- Marketing – Segmenting customers based on behavioral factors.

- Machine Learning – Feature engineering and dimensionality reduction.

- Risk Management – Quantifying exposure to systemic risks.

For those interested, you can explore where to learn factor model applications in quantitative trading, machine learning, and academic courses.

Industry Trends in Factor Models

- AI Integration: Machine learning enhances factor discovery by automating feature extraction.

- Alternative Data: Sentiment analysis, ESG scores, and satellite imagery are becoming new factors.

- Explainable AI (XAI): Ensures factor models remain interpretable while leveraging complex algorithms.

- Real-Time Factor Models: Cloud computing allows factor updates on streaming data.

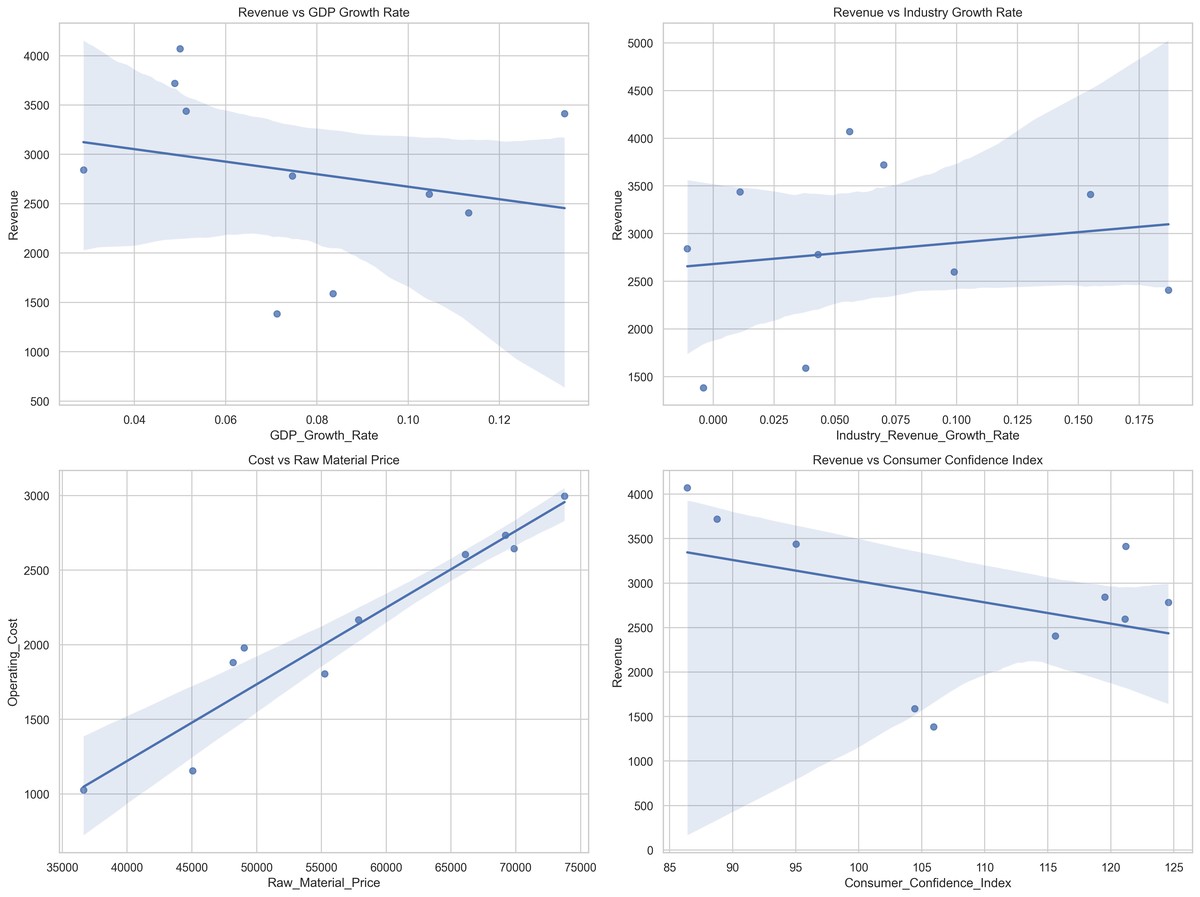

Visual Insights

PCA reduces dimensions by extracting orthogonal factors that explain maximum variance.

Factor models decompose returns or data variability into systemic factors and idiosyncratic noise.

Frequently Asked Questions (FAQ)

1. Why use factor models instead of traditional regression?

Factor models simplify high-dimensional datasets by grouping correlated variables into latent factors. Unlike standard regression, which may overfit, factor models reduce noise and improve generalization.

2. How do factor models predict markets?

In finance, factor models explain returns through market-wide and stock-specific drivers. For example, a three-factor model might use market risk, company size, and value exposure to predict performance. This structured approach improves robustness compared to ad-hoc regressions.

3. What skills do data scientists need to build factor models?

Key skills include:

- Statistical modeling (PCA, regression, factor analysis).

- Programming (Python, R, SQL).

- Domain knowledge (finance, healthcare, marketing).

- Model evaluation techniques (cross-validation, performance attribution).

Conclusion

Factor models for data scientists are indispensable for extracting insights from complex datasets. Whether used for dimensionality reduction, financial modeling, or risk management, they provide a structured framework to simplify analysis while boosting interpretability and predictive performance.

By blending statistical methods like PCA with domain-driven factors, data scientists can achieve the best balance of power and clarity.

👉 Have you built a factor model in your work? Share your experiences in the comments, and let’s discuss how data scientists can push the boundaries of factor modeling further!

0 Comments

Leave a Comment